Takeaways from Google File System!

GFS is distributed file system that stores and processes large amounts of distributed data intensive applications data on a storage cluster of commodity servers.

NOTE: This article is brief and by no means a comprehensive in-depth look into the workings of GFS! This post will be updated over time with more content.

Why GFS?

Single node file systems have:

- Limited storage, processing power, I/O operations throughput

- Vertical scaling can only help so much

- Single point of failure

Requirements for a new file system that will solve the above problems:

- Scalable - need to support hundreds of terabytes of data

- Fault tolerance - availability and data integrity should not be compromised by component failures

- Durability - data should not be lost unless deleted by the user

- Easy operational management - data replication, garbage collection, snapshots etc.

- High throughput over low latency for large datasets

- GFS is optimize for append operations rather than overwriting, for example, storing application logs

- Relaxed consistency model i.e more appends than random writes

Architecture

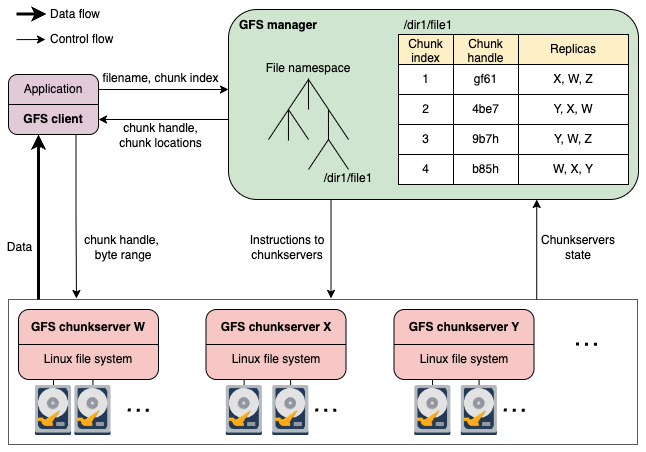

Manager node

- Assigns each chunk a 64 bit globally unique ID and assigns chunk servers where the chunk is stored and replicated

- System metadata, including namespaces, file-to-chunk mapping and chunk location

- Stores metadata in memory for performance, but also stores it in an operation log placed on managers hard disk for durability

- Data replication and re-balancing

- Operational locks to ensure data consistency

- Garbage collection of the deleted data

Chunk servers

Commodity storage servers that store chunks as plain linux files

How is the manager node scaled and tolerates any failures?

- Data doesn’t flow through the manager node for less overhead on manager node

- Secondly, the chunk size is kept large to reduce the metadata requests on the manager

- The large chunk size helps reduce the network overhead by maintaining a persistent connection with the chunkserver until we are done with reading/writing that chunk completely or the connection duration expires

- Keeping the metadata in memory and serving the clients from there

- Caching the metadata on the client side

Random reads - accessing random pages of a large text files

- Client can’t cache chunk locations as the chunks are random

- Performance concerns arise from small random reads. Applications that prioritize performance frequently batch and sort their small reads in order to progress continuously through the file as opposed to back and forth.

Large streaming reads - playing a video without interruption

- More efficient as the chunk location is cached

- Large streaming reads and sequential writes are the more common cases for targeted applications.

Deep Dive

Each chunk is 64 MB in size and is identified with a unique ID called a chunk handle. Three replicas for each chunk are created for reliability.

Scalability and fault tolerance

- Heartbeat messages b/w manager and chunkservers for health, storage left, and chunk placement.

- GFS uses a monitoring system that runs outside the cluster to monitor the machines inside the cluster.

- Internal fragmentation is caused if the file size is less than the chunk size:

Lazy space allocation prevents the waste of storage space caused by internal fragmentation. It does this by postponing the physical space allocation for a chunk until enough data has accumulated.

Hadoop Distributed File System (HDFS), changed the chunk size to 128 MB according to their needs.

The in-memory metadata might be lost in case of the manager’s failure. A persistent metadata record is kept on the manager’s hard disk by logging the metadata modifications in an operation log.

Create Operation

- A read lock is acquired on the directory name (full path to the directory) to make sure that the directory in which the file is being created is not deleted or renamed by another client during the file creation operation.

- A write lock is acquired on the file name (full path to the file) to serialize the creation of two or more files with the same name.

- We can acquire a read lock on a region that is already read-locked but we can’t acquire a write lock on a region that is already write-locked.

Write Operation

Two Types:

- Random writes

- Append writes

Manager nodes picks a primary replica to write to.

- Since the manager has the authority to choose the primary replica for the clients, it grants a lease to a healthy replica, not a failed replica.

- Serialization is required to cope with the data inconsistency issue among the replicas shown above. It is challenging to serialize write operations across multiple replicas. The manager has to do a lot of work to manage all this. So, the manager uses the lease mechanism to reduce this management overhead.

- With a lease, all the write operations are carried out on a single replica at a time, which makes it easy to serialize operations on a single chunkserver.

Delete and Snapshots

- A File is marked for deletion in the manager node (the namespace ) and immediately returned (the delete request) to client.

- Garbage collection later deletes the file from different chunk serves and replicas.

We don’t need to duplicate the data unless it has changed. The manager node in the GFS duplicates the metadata for the snapshot file. In this case, a chunk handle in the metadata will be pointing to two different files (more than two if more snapshots of the same file are created).

Suppose a client requests a write operation on the chunk that is pointing to more than one file in the metadata. In that case, the manager first generates a new chunk handle, duplicates the chunk data for it, and then performs the write operation on the chunk associated with the requested operation in the metadata.

- The mechanism above is called copy-on-write (COW). This idea is borrowed from Linux’s fork system call.

Consistency

- GFS guarantees data consistency. GFS doesn’t provide a strong consistency model to achieve good performance and scalability for client operations

- The guarantees are provided in terms of defined, undefined, consistent, and inconsistent regions

- These guarantees show that GFS is more suitable for append operations. So, GFS is a good solution for applications that append data to files, like logging systems, web crawlers, MapReduce, etc.

Data Corruption

Component failures are common and can cause data corruption. On reads, the chunkservers identify the corrupt data by verifying checksums maintained by the manager node.

For metadata, the GFS provides a strong consistency model so that the system works normally in case of a single manager’s failure