Cassandra in less than 5 mins

The database discussion section of a system design interview is often the crux of most interview questions. In my experience, for most systems that do not have ACID requirements, NoSQL database is an appropriate choice. Of course, this is in the context of system design interviews and the real world scenario might require specific database solutions.

Traditionally, you always start with what type of database you are going to be using. But before you can even thing about the type of database to use (relational vs non-relational), try thinking in terms of the data.

Specifically ask yourself: (not an exhaustive list, will keep updating this list.)

- What kind of data are we dealing with (metadata, multimedia etc)

- What are some of the common data access patterns?

- Read heavy vs write heavy

- What do we need to optimize in this system?

- Read optimized or write optimized

- Storage cost

- ACID guarantees

- Consistency vs Availability

- Do we need to optimize for range queries?

- Time series data?

- Replication - leaderless, single leader, multi leader etc.

- Sequential reads or writes?

Cassandra

- Column oriented database (also known as wide column database)

- Supports high read and write throughput (explanation below)

- Highly available and scalable (horizontally scalable)

Use Cases

Write heavy applications

- If data is generally self contained, and only needs to be fetched with other data from its partition - can also use arrays, sets in rows

- Examples:

- Sensor readings (same partitioning key for the same sensor),

- Chat messages (chatID - partition key, timestamp - ordering key),

- User activity tracking

Storage Engine (what makes Cassandra fast)

CommitLog The writes first go to WAL (write ahead log) or CommitLog before being written to a disk (SSTable).

Why not directly update the write to the disk instead of updating WAL?

- WAL updates are cheaper as it’s append-only and doesn’t require any restructuring of data on disk.

- This also provides durability in the case of unexpected shutdown. On startup, any mutations in the commit log will be applied to memtables.

There are two primary tree data structures used in database: B-Trees and LSM Trees Cassandra uses LSM trees:

- Writes are extremely fast as they are first sent to memory.

- Depending on how often log compaction is performed, frequent updates or deletes of a row can take up a lot of extra disk space and cpu resources

- Tombstones for deletion further optimizes writes

- performance of reads can a bit slower compared to B-trees.

- Cassandra uses bloom filters as an optimization (approximates the contents of a set)

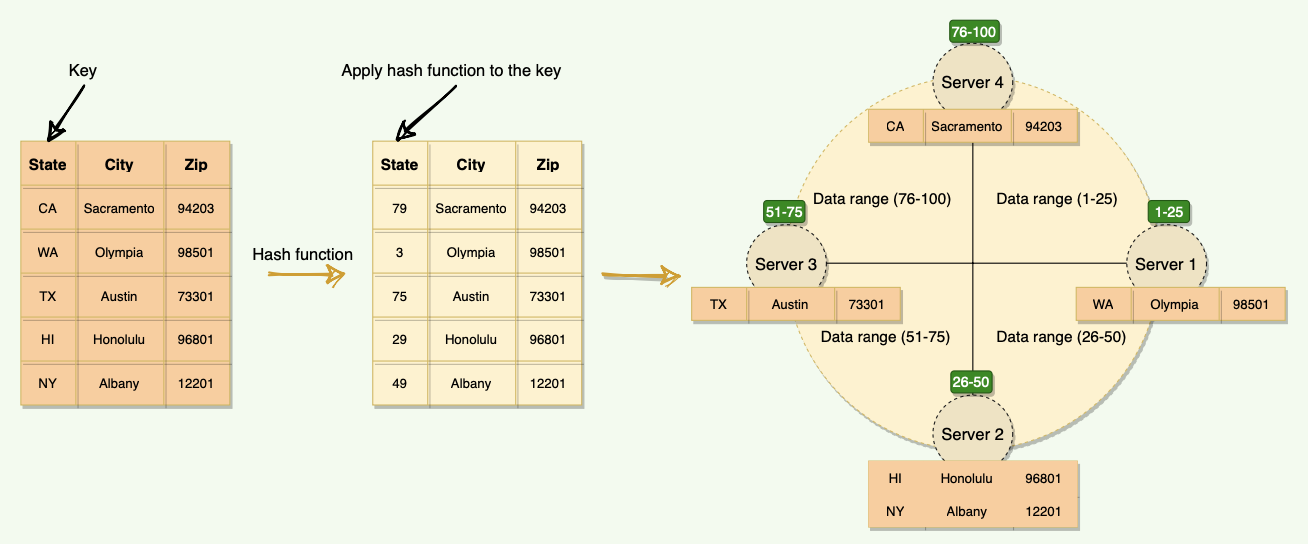

Partitioning

- Cassandra partitioning key in conjunction with consistent hashing (optimized re-balancing when nodes are deleted or added to a cluster) to determine which replica to send a given row to.

- Each shard is replicated with the number of replicas specified by the user.

- Adding / removing nodes will cause the recomputing tokens - some nodes can become hotspots as it will take on broader range.

- Re-balancing and distribution of data results in moving a lot of data.

- VNodes are used to spread the load more evenly across the physical nodes on the cluster by dividing the hash ranges into smaller sub-ranges. This speeds up the re-balancing process after adding or removing nodes.

Replication

Leaderless replication

- Every single write is sent to every single replica for a given partition (as opposed to one leader node) and the admin can choose how many replicas need to respond with a success before returning to the client (can use quorums).

- Client sends writes to one coordinator node in cassandra cluster

- This means that there will be inevitably be conflicting writes

- last write wins (most recent timestamps)

- Clocks are not reliable, can use something like NTP (network time protocol)

- Read repair on reads, if client sees a replica with an outdates value it will update it

- Anti-entropy process running in background ensures eventual consistency of replicas - eventual consistency

- If for some reason replicas cannot handle writes in a given moment, the coordinator will store the write to be sent to them later, and then perform a hinted handoff

- last write wins (most recent timestamps)

Fault Tolerance

- Data stored on replicas:

- Each replica added should allow read and write throughput to scale linearly.

- Customizable replication topology - number of replicas in addition to location such as different rack or data center.

- Faults detected via gossip protocol

- Nodes keep track of heartbeats received by other nodes in the cluster and their timestamp and send these via gossip, if a node has not gossiped in a long time it is presumed dead

Hinted Handoff

- When a node is down or does not respond to a write request, the coordinator node writes a hint in a text file on the local disk. This hint contains the data itself along with information about which node the data belongs to.

- When the coordinator node discovers from the Gossiper that a node for which it holds hints has recovered, it forwards the write requests for each hint to the target.

- Cassandra can fix this stale data while serving a read request. Cassandra can issue a Read Repair when it sees stale data

Indexes

Cassandra uses the clustering keys to create indexes of the data within a partition. - Note that these are only local indexes not global indexes. If you have many clustering keys in order to achieve multiple different sort orders, Cassandra will de-normalize the data such that it keeps two copies of it, slow down writes - Secondary indexes are not global.

Conclusion

- Ideal for write-heavy applications with millions or billions of users, where performance is a primary concern (e.g., messaging, sensor readings, activity tracking).

- Main pitfalls include a lack of strong consistency and the inability to support data relationships beyond sorting within a partition.